Procedural Podracer (January - May 2014)

I loved my pseudo-3D PlayStation 2 podracing game. It was the first project I had done that made me feel at all capable. It was proof to myself that I could push myself to make something right at the edge of my capabilities. When I realised that the second semester masters graphics module I was doing was just a rehash of the module I did in 3rd year, I was initially annoyed. But I decided to use it as an opportunity to compare my progress over the span of 2 years. The module focuses on creating an application with procedural generation and post-processing effects. It starts off with different basic terrain techniques such as faulting, mid-point displacement and particle deposition. I'd already done that of course, as you can see in my honours project page and one of my first blog posts. So I decided to instead think of what I could do for my coursework. As awesome as procedural planets are, I didn't really want to do that 3 times in a row. I then thought back to my podracing game. What if I could make a "next gen" version, with better graphics and more features? Well, that would probably be crazy. I worked tirelessly on the PS2 game but didn't give as much attention to the accompanying coursework. There's no way I could do that now: I was trying to give as much effort as I could to all the work that I was doing, which was a lot of bloody work. This was unsettling for me because I knew that I'd now set an ambitious target for myself and if I didn't meet it, I'd be disappointed in my work.

I decided to just crack on with it and hope that I was able to achieve it in the end.

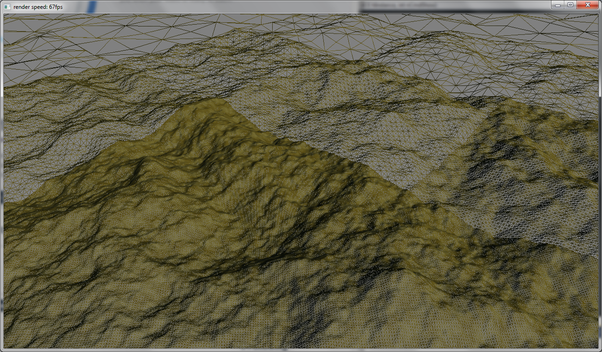

As it's a land racer, I was actually able to revisit some of the third year terrain stuff. I remember learning the hard way the exact purpose of an index buffer when I ran white noise through my terrain only to find it broke apart. I also remembered how happy I was with myself when I created the terrain patch as intended. This time had to be better. Last time, I was able to specify the width and length of the patch in squares. This time, I had it so that you specified the measurements for the patch and then the density of both the width and length separately. I also made it so that the patch was correctly UV mapped, which made it look instantly more impressive than my vertex coloured patch. As I was creating the terrain, I realised I could take advantage of how index buffers work to create different levels of detail. All this functionality can be seen in my video demonstrating it.
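A minimal sketch of how a patch like this could be put together, not the project's actual code. It assumes a flat grid where the density is given as a number of cells along each axis; `Vertex`, `buildPatchVertices`, `buildPatchIndices` and the `step` parameter are all hypothetical names. The level of detail trick is that the vertex buffer stays the same and only the index buffer changes, skipping vertices at coarser levels.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical vertex layout: position plus one set of UVs.
struct Vertex {
    float x, y, z;
    float u, v;
};

// Builds the vertex grid for a flat patch of width x length (world units),
// split into cellsX by cellsZ quads. UVs run from 0 to 1 across the patch.
std::vector<Vertex> buildPatchVertices(float width, float length, int cellsX, int cellsZ) {
    assert(cellsX >= 1 && cellsZ >= 1);
    std::vector<Vertex> vertices;
    vertices.reserve((cellsX + 1) * (cellsZ + 1));
    for (int z = 0; z <= cellsZ; ++z) {
        for (int x = 0; x <= cellsX; ++x) {
            float u = static_cast<float>(x) / cellsX;
            float v = static_cast<float>(z) / cellsZ;
            vertices.push_back({u * width, 0.0f, v * length, u, v});
        }
    }
    return vertices;
}

// Builds a triangle list over those vertices, visiting every `step`-th vertex.
// step = 1 is full detail; step = 2 draws a quarter of the quads, and so on.
// Assumes step divides both cellsX and cellsZ. The winding order may need
// flipping depending on the cull mode in use.
std::vector<std::uint32_t> buildPatchIndices(int cellsX, int cellsZ, int step) {
    assert(step >= 1 && cellsX % step == 0 && cellsZ % step == 0);
    std::vector<std::uint32_t> indices;
    const int stride = cellsX + 1; // vertices per row
    for (int z = 0; z < cellsZ; z += step) {
        for (int x = 0; x < cellsX; x += step) {
            std::uint32_t i0 = z * stride + x;          // near left
            std::uint32_t i1 = i0 + step;               // near right
            std::uint32_t i2 = (z + step) * stride + x; // far left
            std::uint32_t i3 = i2 + step;               // far right
            indices.insert(indices.end(), {i0, i2, i1, i1, i2, i3});
        }
    }
    return indices;
}
```

Neighbouring patches at different levels of detail would show cracks along their shared edge with a scheme this simple, so a real terrain would need some form of stitching on top.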

The next thing that I knew I had to have in my terrain was correctly calculated normals. This was something I didn't have at all in my 3rd year terrain. When I got this in (and added in Perlin noise) the terrain started coming along really nicely. The pace I was moving at was enthralling! One of the things that I really wish I'd managed to implement in my honours project was collision with the terrain. It wouldn't have been too difficult to implement: I'd need to calculate where a ray from the camera to the centre of the planet intersected the sphere and then work out the displacement from the noise function used. I could have then also used that functionality to improve the camera culling, but alas I ran out of time. This time, however, I simply needed to query the 2D position and get the height. This was made marginally more complicated when I created an overall terrain manager which created patches and allowed me to make much bigger terrain as well as move it about. You can see my placeholder running along the landscape here. I then went on to change the terrain model to use the ridged multifractal technique. From my honours project, I learnt that it not only creates rocky/sandy terrain but also actually runs faster at higher octaves than fractal Brownian motion, which for me is a win-win.
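As a rough illustration of the two ideas above, here is a sketch of a height lookup from a 2D position and a normal calculation on a regular grid of heights. It assumes a single patch with its origin at (0, 0) and y pointing up; `HeightField`, `heightAt` and `normalAt` are hypothetical names, and a terrain manager with several movable patches would first have to work out which patch the position falls in.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct Vec3 { float x, y, z; };

// Hypothetical heightfield: cols x rows samples, `spacing` world units apart.
struct HeightField {
    int cols, rows;
    float spacing;
    std::vector<float> heights; // row-major, size cols * rows

    // Sample lookup that clamps to the edge of the grid.
    float at(int x, int z) const {
        x = std::max(0, std::min(x, cols - 1));
        z = std::max(0, std::min(z, rows - 1));
        return heights[z * cols + x];
    }

    // Height at a 2D world position: bilinear interpolation of the four
    // samples surrounding it.
    float heightAt(float worldX, float worldZ) const {
        float gx = std::max(0.0f, std::min(worldX / spacing, static_cast<float>(cols - 1)));
        float gz = std::max(0.0f, std::min(worldZ / spacing, static_cast<float>(rows - 1)));
        int x0 = static_cast<int>(gx);
        int z0 = static_cast<int>(gz);
        float fx = gx - x0;
        float fz = gz - z0;
        float near = at(x0, z0) + fx * (at(x0 + 1, z0) - at(x0, z0));
        float far = at(x0, z0 + 1) + fx * (at(x0 + 1, z0 + 1) - at(x0, z0 + 1));
        return near + fz * (far - near);
    }

    // Normal at a grid sample from central differences of the neighbours.
    // At the border the clamped lookup makes this only approximate.
    Vec3 normalAt(int x, int z) const {
        float dx = at(x + 1, z) - at(x - 1, z);
        float dz = at(x, z + 1) - at(x, z - 1);
        Vec3 n{-dx, 2.0f * spacing, -dz};
        float len = std::sqrt(n.x * n.x + n.y * n.y + n.z * n.z);
        return {n.x / len, n.y / len, n.z / len};
    }
};
```

Averaging the face normals of the triangles around each vertex is another common way to get the normals; the central difference version is just shorter to show.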

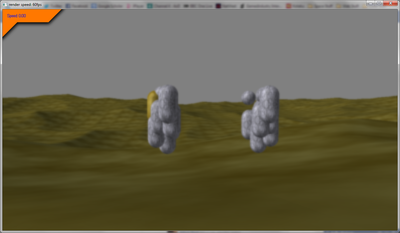

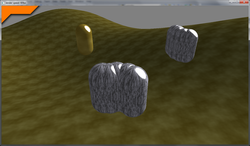

I knew I wanted to go all out on the procedural generation. Not just the terrain but also the track and even the podracers themselves. How cool would it be to have different podracers each time, but actually be able to get the same one by using the same seed (because pseudo random is the best random)? When first diving into this, I realised a flaw in my framework as it stood. Last semester, when I made the framework in a whole 3 weeks, I didn't have to worry about many models. In fact, each model was directly attached to the object that renders, ergo I couldn't instance the same model multiple times. This meant I had to do a pretty hefty overhaul of the way I was rendering models. Fortunately I managed this without too much fuss. I then got cracking on with creating a podracer object, at which point I realised just how bloody complex they were going to be. But I carried on and created an initial concept that I was pretty pleased with. You could pass in models for the pod and the engines and it would generate them in random positions. My favourite part is the fact that the engines mirror each other, as can be seen in the picture below.
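The seed idea can be sketched like this. It is only an illustration: `PodLayout`, `generatePodLayout` and the value ranges are made up, and the real generator placed whole models rather than three numbers. The point is that everything random comes from one seeded generator, and the second engine is a mirror of the first rather than a second roll of the dice.

```cpp
#include <cassert>
#include <cstdint>
#include <random>

struct Vec3 { float x, y, z; };

// Hypothetical layout for one podracer: engine positions relative to the pod.
struct PodLayout {
    Vec3 leftEngine;
    Vec3 rightEngine;
};

// Generates a layout from a seed. The same seed always gives the same pod.
PodLayout generatePodLayout(std::uint32_t seed) {
    std::mt19937 rng(seed);
    // Map raw generator output to [lo, hi] by hand, so the result does not
    // depend on how a given standard library implements its distributions.
    auto range = [&rng](float lo, float hi) -> float {
        float t = static_cast<float>(rng()) / static_cast<float>(std::mt19937::max());
        return lo + t * (hi - lo);
    };
    float sideways = range(1.0f, 3.0f);  // illustration values only
    float height = range(-0.5f, 0.5f);
    float forward = range(2.0f, 6.0f);

    PodLayout layout;
    layout.rightEngine = {sideways, height, forward};
    layout.leftEngine = {-sideways, height, forward}; // mirrored across the pod's centre line
    return layout;
}
```

Calling `generatePodLayout(42)` twice gives identical layouts, while a different seed gives a different pod.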

I was getting more and more excited, as this progress was in the space of only a couple of weeks and that was alongside all the other projects that I was working on. The more difficult part was the controls for the pod. I knew how I wanted it to control but making that a reality wasn't very easy. I wanted it so that you could control each individual engine. Accelerating one and decelerating the other would be the method for making you turn. You could also rotate the engines to strafe. Combining these would produce different ways to turn, although it was certainly a slight mindfuck. The controls, I feel, would be better suited to a game controller, but I can't let extra shiny things distract me when the project is already so crazy big.
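One way to picture the per-engine control is as differential thrust: the average of the two throttles drives the pod forward and the difference between them turns it. The sketch below assumes exactly that and nothing more; `PodState`, `EngineInput`, `stepPod` and the tuning constants are all invented for the example, and strafing by rotating the engines is left out.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical pod state on the ground plane.
struct PodState {
    float x = 0.0f, z = 0.0f;
    float heading = 0.0f; // radians; 0 faces +z, positive turns towards +x
    float speed = 0.0f;
};

// Throttle for each engine, expected in [-1, 1].
struct EngineInput {
    float leftThrottle;
    float rightThrottle;
};

void stepPod(PodState& pod, const EngineInput& in, float dt) {
    const float maxAccel = 40.0f;   // made-up value: units / s^2 with both engines at full
    const float maxTurnRate = 1.5f; // made-up value: radians / s with engines fully opposed
    float left = std::max(-1.0f, std::min(in.leftThrottle, 1.0f));
    float right = std::max(-1.0f, std::min(in.rightThrottle, 1.0f));

    // Average throttle pushes the pod forward; the difference turns it.
    // More thrust on the left engine swings the nose to the right.
    pod.speed += 0.5f * (left + right) * maxAccel * dt;
    pod.heading += 0.5f * (left - right) * maxTurnRate * dt;

    // Move along the current heading.
    pod.x += std::sin(pod.heading) * pod.speed * dt;
    pod.z += std::cos(pod.heading) * pod.speed * dt;
}
```

With both throttles equal the heading never changes, and with them fully opposed the pod spins on the spot, which matches the "accelerate one, decelerate the other" idea.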

Once I had the podracer up and running, I knew I needed to hold off on the procedural generation, which I was obviously quite comfortable with, and instead make sure I had the other half of the application implemented: post-processing. This was definitely the biggest shortfall of my 3rd year project. The planet was awesome but it would have been much better if I'd managed to implement proper high dynamic range lighting instead of a hacked bloom effect. I went into implementing post-processing in my framework confident that I fully understood it. When presented with a black screen, I figured that I perhaps didn't. After looking into it further, I managed to get my staple post process to render: greyscale. It's simple to implement but gives a very definite indication that the post process is working. I've a video of when I first got the scene to render to a texture and then the greyscale itself. I remembered seeing that someone in 3rd year had created a cool looking effect by shifting the RGB values. I figured this couldn't be that difficult, so I had a crack at it and was met with success. The next task was multiple stages of post processes. This again took a bit of work, but I eventually made it so that it rendered the scene and then had a horizontal blur and a vertical blur applied to it, which creates a pretty nice blur effect (the two pass method). It is quite subtle due to the resolution of the screen, though; down-sampling would make it much more apparent. Below are some screenshots of the different effects.
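In the project these effects would run as shaders over a render target, but the maths is easier to show on the CPU. The sketch below is an illustration under that assumption: `Image`, `greyscale`, `blurPass` and `blur` are hypothetical names, the greyscale uses the common Rec. 601 luminance weights, and the blur uses a plain box filter rather than whatever weights the real shader used.

```cpp
#include <cassert>
#include <vector>

struct Colour { float r, g, b; };

// Hypothetical CPU-side image standing in for a render target.
struct Image {
    int width, height;
    std::vector<Colour> pixels; // row-major

    // Pixel lookup that clamps to the edge, like a clamped texture sampler.
    Colour at(int x, int y) const {
        if (x < 0) x = 0;
        if (x >= width) x = width - 1;
        if (y < 0) y = 0;
        if (y >= height) y = height - 1;
        return pixels[y * width + x];
    }
};

// Greyscale pass: replace each pixel with its luminance.
Image greyscale(const Image& src) {
    Image dst = src;
    for (Colour& c : dst.pixels) {
        float l = 0.299f * c.r + 0.587f * c.g + 0.114f * c.b;
        c = {l, l, l};
    }
    return dst;
}

// One blur pass along a single axis: (dx, dy) is (1, 0) for the horizontal
// pass and (0, 1) for the vertical pass.
Image blurPass(const Image& src, int dx, int dy, int radius) {
    Image dst = src;
    const float weight = 1.0f / (2 * radius + 1); // box filter: equal weights
    for (int y = 0; y < src.height; ++y) {
        for (int x = 0; x < src.width; ++x) {
            Colour sum{0.0f, 0.0f, 0.0f};
            for (int i = -radius; i <= radius; ++i) {
                Colour c = src.at(x + i * dx, y + i * dy);
                sum.r += c.r;
                sum.g += c.g;
                sum.b += c.b;
            }
            dst.pixels[y * src.width + x] = {sum.r * weight, sum.g * weight, sum.b * weight};
        }
    }
    return dst;
}

// Two pass blur: the output of the horizontal pass feeds the vertical pass.
Image blur(const Image& scene, int radius) {
    return blurPass(blurPass(scene, 1, 0, radius), 0, 1, radius);
}
```

Splitting the blur into two passes costs 2 × (2r + 1) samples per pixel instead of (2r + 1)² for the equivalent single 2D pass, which is why the two pass method is the usual choice.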

Due to an error, as can be read about on my blog, I wasn't able to create a motion blur effect as I had desired. Refusing to be defeated, I applied a different method which pleasingly did work. I combined the motion blur with altering the camera's field of view and even attaching two particle emitters to the engines to kick up dust. These combined effects managed to improve the sense of speed without having to actually alter the speed value. I swapped the order of the particles so that the older particles are drawn first. This increased the likelihood of the particles being drawn in the correct order. However, I decided to fill out the trail more and, as a result, some particles are drawn after particles that are in front of them have already been drawn (and so, due to depth testing, some parts are cut out). Still, I felt the effect I was after had been achieved and, given the crazily small amount of time I had left, I decided to leave it as it was for the time being. I posted up a video of when I got the trails in as well as another to show the added effect of the motion blur and field of view adjustment.
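The ordering problem described above is usually tackled by sorting the particles by distance from the camera each frame and drawing the furthest ones first. That is not what the project did (it drew oldest first, which is cheaper and mostly right for a trail streaming out behind the engines), so treat the following as a sketch of the alternative; `Particle` and `sortBackToFront` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

struct Vec3 { float x, y, z; };

struct Particle {
    Vec3 position;
    float age; // seconds since emission; not used by the depth sort below
};

float distanceSquared(const Vec3& a, const Vec3& b) {
    float dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return dx * dx + dy * dy + dz * dz;
}

// Orders particles so that the ones furthest from the camera come first.
// Squared distances are enough for comparing, so the square root is skipped.
void sortBackToFront(std::vector<Particle>& particles, const Vec3& cameraPosition) {
    std::sort(particles.begin(), particles.end(),
              [&cameraPosition](const Particle& a, const Particle& b) {
                  return distanceSquared(a.position, cameraPosition) >
                         distanceSquared(b.position, cameraPosition);
              });
}
```

The trade-off is a sort every frame per emitter, which may or may not be worth it compared with simply living with a few clipped particles.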

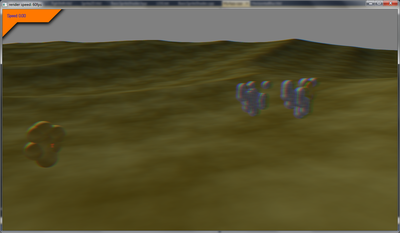

Due to time constraints, the AI was even more dumb than the PS2 version: the other racers literally just go forward full blast with random full speeds... But it created a good enough effect to show what it'd be like having people flying alongside you.

What did I actually do?

I created a racing game in DirectX 11 where the terrain, track and racers are all procedurally generated. There are also post-processing effects applied. It is built on my own framework, and I created all the main components myself.