Procedural planet - The Prequel

I love space. Not a week goes by without something new being discovered or worked out about the vast cosmos that we Earthlings inhabit. I struggle to comprehend how others can fail to be perplexed by the many bizarre things floating around out there.

When our graphics module moved on to procedural generation, I was excited. I love creating worlds. Not necessarily planets, but worlds that organisms could inhabit. In second year, for our parallel programming module, I created a simulator where plants grew randomly and an animal would search for these plants to feed on to survive. I loved creating this and then just letting it play out. I expanded on this idea a couple of years later by creating a simulator based on a genetic algorithm, where you set the initial population, the density of food and how long the simulation should aim to run, and then let it go.

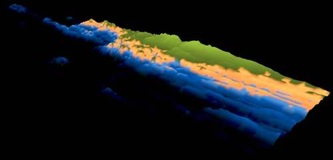

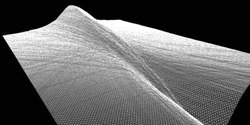

Procedural generation is exciting because it expands what I can create visually. I loved being able to read what was happening in my console output, but it would be even cooler to see the actual events happen. The first thing I did was create a plane and apply a faulting method to it. This was cool and created what could be perceived as a mountain, seen in the image to the right. I started off with straight fault lines, but then I got creative and added curved ones, such as parabolas. I then edited the basic shader I had been using so that the vertices were coloured based on their height.
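For anyone curious how a faulting method works, here is a minimal sketch in Python rather than shader code. It is an illustration under my own assumptions, not the code from the module: the names `fault_terrain` and `height_colour`, the grid size, the step size and the green-to-white colour ramp are all made up for the example, and it only covers straight fault lines.

```python
import random

def fault_terrain(size, iterations, step=1.0, seed=0):
    """Build a size x size heightmap by repeatedly cutting it with random lines.

    Each iteration raises every point on one side of a random line and
    lowers every point on the other side (hypothetical parameters).
    """
    rng = random.Random(seed)
    heights = [[0.0] * size for _ in range(size)]
    for _ in range(iterations):
        # A random line is described by a point on it and a direction.
        px, py = rng.uniform(0, size), rng.uniform(0, size)
        dx, dy = rng.uniform(-1, 1), rng.uniform(-1, 1)
        for y in range(size):
            for x in range(size):
                # The sign of the 2D cross product says which side we are on.
                side = dx * (y - py) - dy * (x - px)
                heights[y][x] += step if side > 0 else -step
    return heights

def height_colour(h, lowest, highest):
    """Blend from green at the lowest height to white at the highest."""
    t = 0.0 if highest == lowest else (h - lowest) / (highest - lowest)
    low, high = (0.0, 0.6, 0.0), (1.0, 1.0, 1.0)
    return tuple(a + (b - a) * t for a, b in zip(low, high))

terrain = fault_terrain(size=16, iterations=50)
flat = [h for row in terrain for h in row]
colours = [[height_colour(h, min(flat), max(flat)) for h in row] for row in terrain]
```

Swapping the straight-line test for a curve (for example, checking whether a point lies above or below a parabola) gives the curved faults mentioned above.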

This not only looked pretty cool, but it actually ran dynamically in real time. It would start off completely flat, and then ground would emerge and sink back again. This went back to what I love doing: creating worlds. I was watching a world being created and aging. This was awesome, but what if I could make an entire planet?

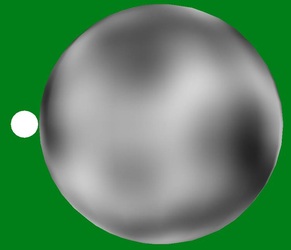

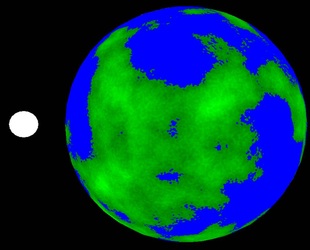

For the rest of the module, that was just what I did. I learnt about Perlin noise and its uses and applied it to a sphere to get the base for creating a planet. While it was beyond my abilities to create the actual land geometry, I was aiming to mimic the structure of the planet by procedurally texturing the sphere. It wasn't long before I had a clear land/water split on the go. I wasn't satisfied with just that, however, so I added little details. First I added coasts: I clamped the water colour at a certain value so that water near the coast would be lighter. I then realised I could reuse the procedural texture for the land to create "clouds". This, however, hid the coasts, and so I dropped them.
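The land/water split and the lighter coastal water can be sketched roughly like this. It is a guess at the general shape of the technique, not the shader from the module: the function name `planet_colour`, the colours, the sea level and the coast width are all assumptions, and the noise value `n` is taken as an input in [-1, 1] so that any noise function can feed it.

```python
LAND = (0.2, 0.6, 0.2)
DEEP_WATER = (0.0, 0.1, 0.4)
SHALLOW_WATER = (0.2, 0.5, 0.8)

def planet_colour(n, sea_level=0.0, coast_width=0.15):
    """Pick a surface colour from a noise value n (hypothetical thresholds).

    Values at or above sea_level are land. Below it, the water colour is
    blended from shallow to deep, and the blend factor is clamped so that
    only water within coast_width of the shoreline is lightened.
    """
    if n >= sea_level:
        return LAND
    depth = sea_level - n
    t = min(depth / coast_width, 1.0)  # 0 at the shore, clamped to 1 offshore
    return tuple(s + (d - s) * t for s, d in zip(SHALLOW_WATER, DEEP_WATER))
```

Sampling the noise at each point on the sphere and passing the result through `planet_colour` gives continents, oceans and a pale band of water hugging the coast.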

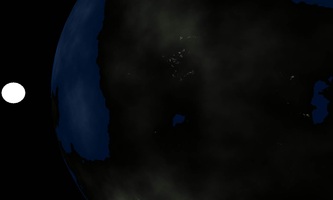

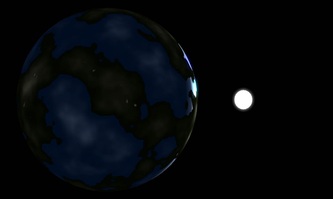

This still wasn't enough. I knew I could do something pretty cool with the procedural texture: I wanted nightlife. It meant using a newly created procedural texture, but it was worth it. I ended up with cities appearing on the dark side of the planet. The final touch was to add post-processing. I added a bloom effect so that the accompanying sun was more convincing. Unfortunately, I didn't have time left to implement HDR lighting, so I just faked it by setting a slight threshold for the bloom effect. The result was something I was really pleased with. Level of detail is implemented as well, so that it doesn't do more calculations than it needs to.
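Here is a rough sketch of both ideas: city lights that only show on the night side, and a thresholded bright pass of the kind a bloom effect typically starts from. Everything in it is an assumption made for illustration (the function names, the thresholds, the fade factor of 4 and the use of Rec. 709 luminance weights); the original was written as shaders and may have worked differently.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def city_light_intensity(normal, sun_dir, is_land, city_noise, city_threshold=0.6):
    """Return 0..1 brightness of city lights at a surface point.

    normal and sun_dir are unit vectors; sun_dir points from the planet
    towards the sun. city_noise is a sample from a second procedural
    texture, and cities only appear where it exceeds city_threshold.
    """
    if not is_land or city_noise < city_threshold:
        return 0.0
    daylight = dot(normal, sun_dir)  # > 0 on the day side, < 0 at night
    # Fade the lights in just past the day/night boundary.
    return min(max(-daylight * 4.0, 0.0), 1.0)

def bright_pass(colour, threshold=0.8):
    """Keep only pixels brighter than threshold; these get blurred into bloom."""
    r, g, b = colour
    luminance = 0.2126 * r + 0.7152 * g + 0.0722 * b
    return colour if luminance > threshold else (0.0, 0.0, 0.0)
```

With a threshold like this, only very bright pixels such as the sun contribute to the bloom, which gives a glow without a true HDR pipeline.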

Procedural planets - Research project

Having a textured sphere that looks like a planet was pretty awesome. But I wanted more. I wanted to fly past mountain peaks. That wasn't going to be easy.

I decided to take advantage of the recently introduced DirectX 11 tessellation stages to create adaptable geometry, but that would mean learning how to use them. I'd also need to learn about mapping a cube to a sphere (though that was rather simple) and utilising quadtrees (so that I could get increasing detail). This was no simple task. I chose DirectX 11 over OpenGL because I already had a base framework to work from. Or so I thought. I quickly learnt that I'd be using the effects framework while I was building from scratch.

Regardless, it wasn't long before I had something on screen. I managed to get a sphere from a cube surprisingly quickly. The next step was getting the sphere's faces to act as if they were 2D planes for the quadtree structures. I have videos of these steps on my Vimeo, one showing the conversion to a sphere and the other showing the detail possible through using quadtrees.
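One simple way to turn a cube into a sphere is to normalise each point on the cube's surface, which pushes it out (or pulls it in) onto the sphere. The sketch below assumes that approach, which may or may not be the exact mapping used in the project. The `FACES` table and the function names are hypothetical; the table shows how each face can keep its own 2D `(u, v)` coordinates, which is what lets a quadtree treat the face as a flat plane.

```python
import math

# Each cube face maps 2D coordinates (u, v) in [-1, 1] to a 3D point on the cube.
FACES = {
    "+x": lambda u, v: (1.0, v, -u),
    "-x": lambda u, v: (-1.0, v, u),
    "+y": lambda u, v: (u, 1.0, -v),
    "-y": lambda u, v: (u, -1.0, v),
    "+z": lambda u, v: (u, v, 1.0),
    "-z": lambda u, v: (-u, v, -1.0),
}

def cube_to_sphere(x, y, z, radius=1.0):
    """Project a point on the cube's surface onto a sphere by normalising it."""
    length = math.sqrt(x * x + y * y + z * z)
    return (radius * x / length, radius * y / length, radius * z / length)

def face_to_sphere(face, u, v, radius=1.0):
    """Map a 2D position on one cube face straight to the sphere."""
    return cube_to_sphere(*FACES[face](u, v), radius=radius)
```

Plain normalisation bunches vertices up towards the corners of each face. That is usually acceptable for a first pass, and other mappings exist that spread them more evenly.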

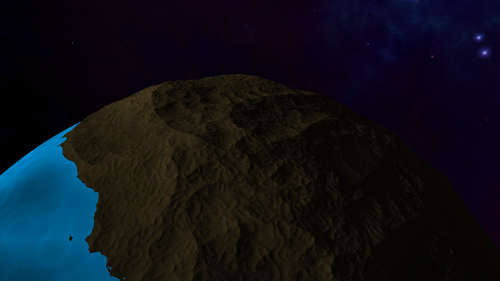

So I had the basis for creating lots of useful triangles, but now I wanted to displace those triangles. I started off using the basic fBm that I had from 3rd year. Great, good-looking coasts, and it's 3D this time! However, it was tricky to see the details of the land because I was still using spherical lighting at this point, so I needed to get the normals of the altered vertices. That's not particularly easy during the tessellation stage, as it's not possible to know where the other vertices are (because some haven't been created and moved yet). But I realised I could just create pseudo vertices and move them as they would eventually be moved. This meant I was tripling the computation, and so I only calculated the vertex normals. It would have been more complicated to work out the normals of the fragments, and I was running out of time.
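The pseudo-vertex idea can be sketched as follows: displace the vertex itself plus two imaginary neighbours a tiny step away, then take the cross product of the two resulting edges. That is three height evaluations per vertex, which is where the tripled cost comes from. This is an illustrative reconstruction in Python, not the domain shader from the project; `height_fn`, `eps`, `scale` and the helper names are all assumptions.

```python
import math

def normalise(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def displaced(direction, height_fn, radius=1.0, scale=0.1):
    """Move a point on the unit sphere outwards according to height_fn."""
    d = normalise(direction)
    return tuple(c * (radius + scale * height_fn(d)) for c in d)

def vertex_normal(direction, height_fn, eps=1e-3):
    """Estimate the normal of a displaced vertex using two pseudo vertices."""
    d = normalise(direction)
    # Build two tangent directions perpendicular to d.
    helper = (0.0, 1.0, 0.0) if abs(d[1]) < 0.9 else (1.0, 0.0, 0.0)
    t1 = normalise(cross(helper, d))
    t2 = cross(d, t1)
    # Displace the real vertex and two nearby pseudo vertices the same way.
    p0 = displaced(d, height_fn)
    p1 = displaced(tuple(a + eps * b for a, b in zip(d, t1)), height_fn)
    p2 = displaced(tuple(a + eps * b for a, b in zip(d, t2)), height_fn)
    e1 = tuple(a - b for a, b in zip(p1, p0))
    e2 = tuple(a - b for a, b in zip(p2, p0))
    return normalise(cross(e1, e2))
```

On a perfectly smooth sphere (a constant `height_fn`) the result is very close to the plain outward direction, and it tilts away from that wherever the terrain slopes.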

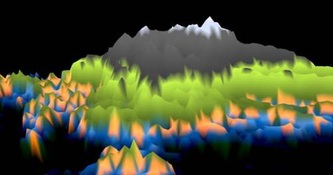

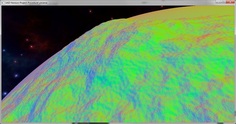

Rendering the normals highlighted that, while it may create convincing coastlines, the fBm didn't create very good mainland. It just looked bubbly. So I looked into other methods and fell in love with multifractals. Unfortunately, the multifractal still wasn't good enough on its own. It created great mountain peaks, but the surface looked like sand dunes. Additionally, the coastlines were sharp and, in fact, there was no ocean, just large lakes. I was surprised to discover, as part of my research, that the multifractal actually performed noticeably better than the other methods I attempted. As such, it was a no-brainer as to which method to show off.
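To show the difference between the two approaches, here is a small sketch. fBm adds octaves of noise together, whereas a multifractal multiplies them, so rough areas get rougher and flat areas stay flat. The noise here is a simple hash-based value noise standing in for Perlin noise, and the multifractal is one common multiplicative formulation. Which variant the project actually used isn't stated, so treat every name and parameter below as an assumption.

```python
import math

def lattice_value(ix, iy, iz):
    """Deterministic pseudo-random value in [-1, 1] for an integer lattice point."""
    h = (ix * 374761393 + iy * 668265263 + iz * 2147483647) & 0xFFFFFFFF
    h = ((h ^ (h >> 13)) * 1274126177) & 0xFFFFFFFF
    h ^= h >> 16
    return h / 0xFFFFFFFF * 2.0 - 1.0

def smooth(t):
    return t * t * (3.0 - 2.0 * t)

def lerp(a, b, t):
    return a + (b - a) * t

def value_noise(x, y, z):
    """Stand-in for Perlin noise: smoothly interpolated lattice values."""
    ix, iy, iz = math.floor(x), math.floor(y), math.floor(z)
    fx, fy, fz = smooth(x - ix), smooth(y - iy), smooth(z - iz)

    def corner(dx, dy, dz):
        return lattice_value(ix + dx, iy + dy, iz + dz)

    x00 = lerp(corner(0, 0, 0), corner(1, 0, 0), fx)
    x10 = lerp(corner(0, 1, 0), corner(1, 1, 0), fx)
    x01 = lerp(corner(0, 0, 1), corner(1, 0, 1), fx)
    x11 = lerp(corner(0, 1, 1), corner(1, 1, 1), fx)
    return lerp(lerp(x00, x10, fy), lerp(x01, x11, fy), fz)

def fbm(p, octaves=6, lacunarity=2.0, gain=0.5):
    """Fractional Brownian motion: a sum of progressively finer noise octaves."""
    total, amplitude, frequency = 0.0, 1.0, 1.0
    for _ in range(octaves):
        total += amplitude * value_noise(*(c * frequency for c in p))
        amplitude *= gain
        frequency *= lacunarity
    return total

def multifractal(p, octaves=6, lacunarity=2.0, gain=0.5, offset=1.0):
    """Multiplicative multifractal: octaves scale each other instead of adding."""
    value, amplitude, frequency = 1.0, 1.0, 1.0
    for _ in range(octaves):
        value *= offset + amplitude * value_noise(*(c * frequency for c in p))
        amplitude *= gain
        frequency *= lacunarity
    return value
```

Either function can be used as the height for a point on the sphere, which makes it easy to swap one for the other and compare the resulting terrain.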

Due to the heavy computation being performed, I wasn't able to get the city lights to work as I would have liked, so I had to drop them, and of course the clouds would've looked rather out of place if rendered on the ground. I had high ambitions at the start to implement atmospheric light scattering, which also never made it in. Looking back, I made a damn impressive tech demo. Being a perfectionist, however, leaves me dissatisfied: I wanted a traversable universe but only ended up with a single solar system. At least it makes for a rather pretty image.

If you would like to read my full Honours research paper on procedural generation, please contact me via the contact form.

What did I actually do?

I did a research project into procedural generation, specifically for creating planets. I wanted to look at the uses of procedural generation. I created an application alongside my paper to help me explore the topics I was researching. To create the application, I learnt how to utilise the DirectX 11 tessellation stages, I learnt how to create a quadtree structure using recursive function calls, I expanded my shader programming knowledge and I expanded my general object-oriented design knowledge.
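As a rough illustration of a quadtree built with recursive function calls, the sketch below splits a square patch into four children whenever the camera is close to it, and repeats that for each child. It is a simplified 2D stand-in written for this post, not the application's code: the class name `QuadNode`, the `detail` factor and the distance test are all assumptions.

```python
import math

class QuadNode:
    """A square patch of a cube face, described by its centre and half-width."""

    def __init__(self, cx, cy, half, depth=0):
        self.cx, self.cy, self.half, self.depth = cx, cy, half, depth
        self.children = []

    def subdivide(self, camera, max_depth, detail=2.0):
        """Recursively split patches that are close to the camera."""
        distance = math.hypot(camera[0] - self.cx, camera[1] - self.cy)
        if self.depth >= max_depth or distance >= detail * self.half * 2.0:
            return  # far enough away, or deep enough: stay a leaf
        h = self.half / 2.0
        self.children = [
            QuadNode(self.cx + sx * h, self.cy + sy * h, h, self.depth + 1)
            for sx in (-1, 1) for sy in (-1, 1)
        ]
        for child in self.children:
            child.subdivide(camera, max_depth, detail)

    def leaves(self):
        """Collect the patches that would actually be drawn."""
        if not self.children:
            return [self]
        return [leaf for child in self.children for leaf in child.leaves()]

root = QuadNode(0.0, 0.0, 1.0)
root.subdivide(camera=(0.9, 0.9), max_depth=4)
patches = root.leaves()
```

The leaves nearest the camera end up small and numerous, while distant ones stay large, which is the increasing detail the quadtree was brought in for.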