So I've got normals added to the vertices. As I went to write this, I was preparing the following pictures:

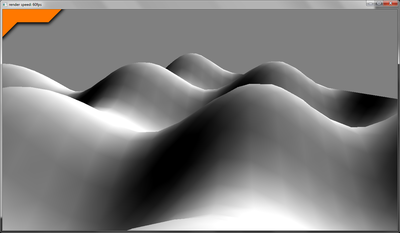

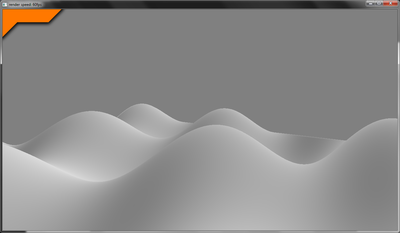

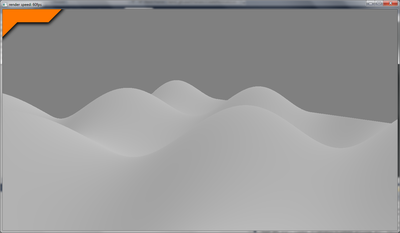

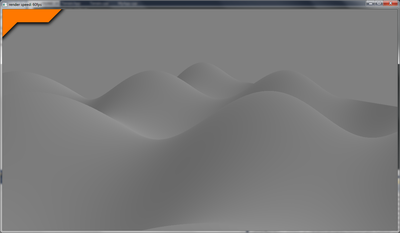

What they show is the apparent discrepancy between the detail of the terrain and the normals generated. The first patch consists of 64 x 64 quads, and the normals are close to what I want, although it is obviously low quality. The next two images show a 512 x 512 patch and a 1024 x 1024 patch respectively, and the lighting that those patches generate. While they are much smoother, the normals appear to fade as the terrain gains more detail. This was really confusing me. I could intensify the lighting in the shader, as I have done in the fourth and final image, but as that image shows, this can affect the parts that should be lighter too.

However, as I went to put up this post I realised what I had done. As ever, it was a silly error on my part. Before calculating the normals, I wrote the function that I knew I would call and filled it with a placeholder that set every vertex normal to point straight up. I then rendered the terrain with the basic light shader to make sure that it was rendering correctly. Tonight I sat down to implement the normal calculations (which actually only took an hour). However, I left the original placeholder normal in and added the calculated normals on top of it. This isn't much of a problem at lower detail, as the calculated normals are about the same length as the placeholder. The issue arises when I create normals from much smaller triangles. The length of an unnormalised cross-product normal scales with the area of its triangle, so these normals are much shorter, and when I add them all up, including the one that is (0,1,0), the result gets more and more biased towards pointing up.
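As a rough sketch of the mistake (not the actual code from this project), the snippet below accumulates unnormalised face normals per vertex on a square grid patch and then normalises the sums. The `Vec3` type, the function names, the `seed` parameter, and the two-triangles-per-quad layout are all assumptions made for illustration. Passing a `seed` of (0,1,0) reproduces the leftover placeholder, while the default zero seed is the fix.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };

static Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }

static Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

static Vec3 normalise(Vec3 v) {
    float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    // Fall back to "up" for a degenerate (zero-length) sum.
    return len > 0.0f ? Vec3{v.x / len, v.y / len, v.z / len} : Vec3{0.0f, 1.0f, 0.0f};
}

// Hypothetical test patch: (quads + 1)^2 vertices, row-major, with height y = x
// (a 45 degree slope), so the true normal everywhere is (-1, 1, 0) / sqrt(2).
std::vector<Vec3> makeSlopedPatch(std::size_t quads, float patchSize) {
    const std::size_t side = quads + 1;
    const float spacing = patchSize / static_cast<float>(quads);
    std::vector<Vec3> positions(side * side);
    for (std::size_t j = 0; j < side; ++j) {
        for (std::size_t i = 0; i < side; ++i) {
            const float x = static_cast<float>(i) * spacing;
            const float z = static_cast<float>(j) * spacing;
            positions[j * side + i] = {x, x, z};
        }
    }
    return positions;
}

// Sums the unnormalised face normals of every triangle touching a vertex,
// starting each sum from `seed`, then normalises the result.
// seed = (0,0,0) is correct; seed = (0,1,0) mimics the leftover placeholder.
std::vector<Vec3> computeNormals(const std::vector<Vec3>& positions,
                                 std::size_t quads,
                                 Vec3 seed = Vec3{0.0f, 0.0f, 0.0f}) {
    const std::size_t side = quads + 1;
    std::vector<Vec3> normals(positions.size(), seed);

    auto addFace = [&](std::size_t a, std::size_t b, std::size_t c) {
        // The cross product's length is twice the triangle's area,
        // so smaller triangles contribute shorter vectors.
        const Vec3 n = cross(sub(positions[b], positions[a]),
                             sub(positions[c], positions[a]));
        const std::size_t corners[3] = {a, b, c};
        for (std::size_t idx : corners) {
            normals[idx].x += n.x;
            normals[idx].y += n.y;
            normals[idx].z += n.z;
        }
    };

    for (std::size_t j = 0; j < quads; ++j) {
        for (std::size_t i = 0; i < quads; ++i) {
            const std::size_t i0 = j * side + i;  // (i,     j)
            const std::size_t i1 = i0 + 1;        // (i + 1, j)
            const std::size_t i2 = i0 + side;     // (i,     j + 1)
            const std::size_t i3 = i2 + 1;        // (i + 1, j + 1)
            addFace(i0, i2, i1);  // wound so flat ground gives +Y
            addFace(i1, i2, i3);
        }
    }

    for (Vec3& n : normals) n = normalise(n);
    return normals;
}
```

With a fixed patch size, raising the quad count shrinks every triangle, so a (0,1,0) seed dominates the sum more and more, which matches the fading seen in the higher-detail screenshots.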

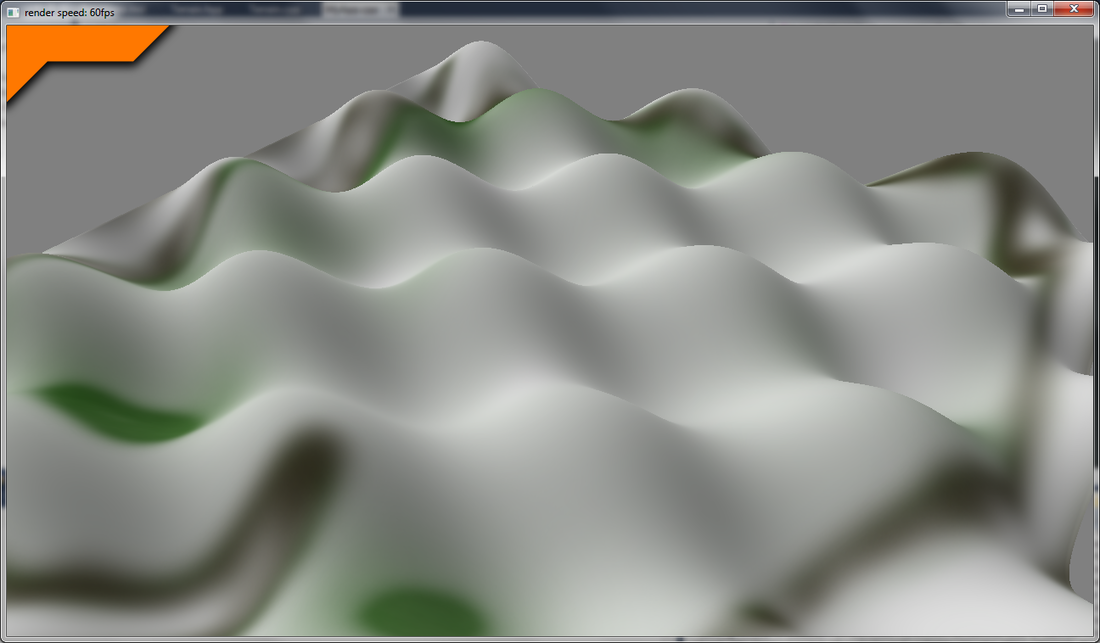

Putting the fix into practice confirmed what I had thought. Goodness, I can be an idiot at times. Below is an image of it rendering properly (with the texture added back in).

My next step is to implement improved noise. If I continue with a static mesh for my terrain, I will have to pass in an offset vector so that the noise is calculated correctly. Otherwise, when I create my patches and line them up, they will just repeat the same heights and more than likely won't connect. I think this is the approach I want to take, as it should save CPU time: static vertex buffers are optimised to run better than dynamic ones. However, further down the line I may find these static terrains too limiting. As it is, I could only create a new one by closing the application and reopening it. I might instead create a terrain manager that allocates the patches on the heap and can regenerate them by releasing those resources and creating a new set. This would cause a performance spike during regeneration but help it run faster the majority of the time.
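To illustrate the offset idea, here is a minimal sketch in which every patch samples one shared noise function at world coordinates, that is, its own offset plus the local grid position. It uses a simple hashed value noise as a stand-in rather than improved noise itself (only the fade curve is borrowed from it), and `Vec2`, `latticeValue`, `valueNoise`, `patchHeights`, and the `frequency` parameter are hypothetical names chosen for the example.

```cpp
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Vec2 { float x, z; };

// Pseudo-random value in [0, 1] for an integer lattice point (stand-in hash).
static float latticeValue(std::int32_t ix, std::int32_t iz) {
    std::uint32_t h = static_cast<std::uint32_t>(ix) * 374761393u
                    + static_cast<std::uint32_t>(iz) * 668265263u;
    h = (h ^ (h >> 13)) * 1274126177u;
    h ^= h >> 16;
    return static_cast<float>(h & 0xFFFFFFu) / static_cast<float>(0xFFFFFFu);
}

// Quintic fade curve 6t^5 - 15t^4 + 10t^3, as used by improved noise.
static float fade(float t) { return t * t * t * (t * (t * 6.0f - 15.0f) + 10.0f); }

static float lerp(float a, float b, float t) { return a + (b - a) * t; }

// Value noise: smooth interpolation between the four surrounding lattice values.
float valueNoise(float x, float z) {
    const float fx = std::floor(x);
    const float fz = std::floor(z);
    const std::int32_t ix = static_cast<std::int32_t>(fx);
    const std::int32_t iz = static_cast<std::int32_t>(fz);
    const float tx = fade(x - fx);
    const float tz = fade(z - fz);
    const float near = lerp(latticeValue(ix, iz),     latticeValue(ix + 1, iz),     tx);
    const float far  = lerp(latticeValue(ix, iz + 1), latticeValue(ix + 1, iz + 1), tx);
    return lerp(near, far, tz);
}

// Heights for one patch of quads x quads cells. The offset moves the patch in
// world space, so neighbouring patches sample the same noise along shared edges.
std::vector<float> patchHeights(std::size_t quads, float patchSize,
                                Vec2 offset, float frequency) {
    const std::size_t side = quads + 1;
    const float spacing = patchSize / static_cast<float>(quads);
    std::vector<float> heights(side * side);
    for (std::size_t j = 0; j < side; ++j) {
        for (std::size_t i = 0; i < side; ++i) {
            const float worldX = offset.x + static_cast<float>(i) * spacing;
            const float worldZ = offset.z + static_cast<float>(j) * spacing;
            heights[j * side + i] = valueNoise(worldX * frequency, worldZ * frequency);
        }
    }
    return heights;
}
```

For example, a patch built with offset (0, 0) and its neighbour built with offset (patchSize, 0) share the same heights along the touching edge, whereas building both with the same offset would just produce two identical patches.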
